Evolving Our Assessment & Future Guiding Principles Workshop Report (2023)

Background and Objectives

1. The Committee on Toxicity of Chemicals in Food, Consumer Products and the Environment (COT) held a workshop to start work on updating its guidance on toxicity testing and the supporting principles. The starting point for the process is to use existing frameworks and guidance, with the aim of introducing innovative improvements where appropriate.

2. The workshop aimed to identify areas where guidance needs to evolve. It included reviewing fundamental risk assessment principles, reviewing current risk assessment guidance and what can be learned from it, the integration of new approach methodologies, and exploring hazard vs risk and weight of evidence.

3. The overall objective was to discuss how the Committee moves forward in a new era of risk assessment.

Workshop Overview

4. The workshop took place in May 2023 in London, United Kingdom. The workshop included themed sessions consisting of short flash presentations followed by roundtable discussion. The four sessions were: Where are we at; What we need to improve; How to achieve; and Looking to the future - moving forward.

Introductions and aims of the day: Objectives and Overall Principles

5. Professor Boobis introduced the workshop, set the scene by outlining the objectives and gave an introduction to the COT, its guidelines and principles.

6. The COT was established in 1978. It is a committee of independent experts that provides advice to the Food Standards Agency (FSA), the Department of Health and Social Care (DHSC) and other Government Departments and Agencies on matters concerning the toxicity of chemicals in food, consumer products and the environment. Although the Committee largely advises on chemicals in food, its remit is wide and it advises various government departments on other matters, including consumer products such as e-cigarettes. The remit is so broad that the Committee may in future want to revisit and clarify it, as some of the topics are also covered by the remits of other scientific advisory committees. In principle, essentially any chemical to which humans are exposed comes under the COT's terms of reference.

7. The methodologies and approaches needed for risk assessment should be broad and flexible to cover the variety of topics and questions the COT may be required to address.

8. The current COT guidance, which was called “guidelines” at the time of preparation, was published in 1982 and has not been updated since. It contains a lot of text on animal husbandry and different test types, much of which has since been superseded by standardised test guidelines that are used elsewhere and that the COT adopts or fully endorses.

9. The focus for the workshop should be on the principles. The experts who put together the 1982 general guidelines were quite foresighted, stating for example: “It would be undesirable to draw up an inflexible list of tests which need to be carried out on every chemical”, and emphasising the importance of exposure assessment: “Consideration of the safety-in-use of a particular chemical should be based on the likely routes, levels and duration of exposure in man and on comparative pharmacokinetic and metabolic data, if available”. Interestingly, the rationale for the use of animals is mentioned: “The results of studies in animals normally form the major part of the evaluation... Although this is not an ideal situation, the use of animals for toxicity testing provides the best means currently available”, highlighting that the guidelines used the best available science at that time. Mode of action was also introduced: “It is now recognised that a variety of non-specific factors may influence the incidence of neoplasms in aged animals…evidence to support this possibility should be sought”, highlighting that the guidelines have stood the test of time. Other chapters, such as toxicological data requirements, were also mentioned.

10. Since the guidelines were published, there has been a lot of work in the area of risk assessment and a lot of developments, not just in technology but also in the way evidence synthesis, modelling, integration of evidence, and systematic reviews are approached.

11. The speaker then emphasised there was no need to reinvent the wheel and existing frameworks like the World Health Organisation (WHO) Principles and methods for the risk assessment of chemicals in food (2009) and the European Food Safety Authority (EFSA) Guidance and other assessment methodology documents should be used or adapted as necessary.

12. The risk analysis process from the general principles document was then discussed; it comprises risk assessment, risk management and risk communication. Problem formulation will be the key interface between the scientific advisory committee (SAC) and risk management, i.e., ensuring that the right question is being asked so that the SAC can give meaningful advice.

13. Professor Boobis then gave personal thoughts and recommendations on areas of the guidance that might require consideration. These included identifying points of departure (POD), including modelling aspects; derivation of health-based guidance values (HBGVs); uncertainty factors; and new approach methodologies (NAMs) when traditional animal data are not used. The whole area of mode of action and adverse outcome pathways (AOPs) has evolved, with the key biological steps leading to an adverse outcome now assessed using a variety of methods ranging from in silico approaches to micro-physiological systems, and this needs to be taken into consideration. The ongoing COT / FSA roadmap on NAMs has highlighted these areas; how to extrapolate to or determine an HBGV, the relationship between adverse and non-adverse outcomes, and exposure duration profiles should all be considered. Finally, it will be important to consider how the guidance will address exposure assessment, the influence of the microbiome and evidence integration.

14. Professor Boobis concluded that this is the start of a process of data interpretation and that the guidance and general principles should be pragmatic where possible to ensure their longevity.

Session 1: Where are we at? The good, the bad and the ugly

What do we currently have (what are the EFSA processes/guidelines), and how are they evolving?

Chemical Mixtures

15. Professor Christer Hogstrand presented on the assessment of chemical mixtures. The speaker started off by stating the areas of relevance for EFSA:

- Human Risk Assessment which includes regulated products, food additives, feed additives, food contact materials, pesticides and contaminants in the food and feed chain.

- Animal Risk Assessment which includes pesticides, feed additives and contaminants in feed.

- Ecological Risk Assessment which includes pesticides, feed additives and contaminants in the food and feed chain.

16. The speaker discussed the harmonisation of methodologies and processes across different areas, noting that these were similar and could be applied to chemical mixtures across the different areas of the risk assessment process.

17. EFSA have created some guidance documents: Guidance on harmonised methodologies for human health, animal health and ecological risk assessment of combined exposure to multiple chemicals and Guidance Document on Scientific criteria for grouping chemicals into assessment groups for human risk assessment of combined exposure to multiple chemicals.

18. It was discussed that for combined chemical risk assessment, grouping is the hardest part.

19. The way EFSA was dealing with interactions (synergism or antagonism) was to apply an additional uncertainty factor only if there were indications of possible interactions at relevant exposure levels.

20. The size of the factor used should be determined on a case-by-case basis depending on the strength of the evidence for the presence or absence of interactions, the expected impact of the interactions and the level of conservativeness in the assessment.

21. Most of the risk assessment was based on an overarching framework for chemical risk assessment consisting of problem formulation, exposure assessment, hazard assessment and risk characterisation.

22. There were two main ways to conduct a mixtures risk assessment. The first is the whole mixture approach, which considers the mixture as if it were a single chemical. This is thought to be a sensible approach, since people are exposed to the mixture as a single entity, but it is complicated and raises several problems and challenges. Examples include defining what the chemical mixture is: how do you know that the mixture currently sold as a product is the same as the one generated 5 years ago, and are there different ratios between the components? In addition, a component present at a low concentration could have effects by itself that might be missed if only the whole mixture is tested. Therefore, the most scientific approach was considered to be the component-based approach, in which each component is assessed individually and the information on the components is then brought together and added up in the risk characterisation, together with the exposure assessment.
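The component-based approach described above can be sketched with the hazard index (HI), a commonly used dose-addition method in which each component's exposure is divided by its own reference value and the resulting hazard quotients (HQs) are summed. The chemical names, exposures and reference values below are hypothetical, and treating an HI above 1 as a trigger for refinement is an illustrative assumption rather than a statement of COT or EFSA practice.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    exposure: float         # estimated exposure, mg/kg bw per day
    reference_value: float  # health-based reference value, mg/kg bw per day


def hazard_quotient(c: Component) -> float:
    """Exposure divided by the component's own reference value."""
    return c.exposure / c.reference_value


def hazard_index(components: list[Component]) -> float:
    """Dose addition: sum the hazard quotients of all group members."""
    return sum(hazard_quotient(c) for c in components)


# Hypothetical assessment group (illustrative values only)
group = [
    Component("chemical_A", exposure=0.006, reference_value=0.01),
    Component("chemical_B", exposure=0.003, reference_value=0.005),
    Component("chemical_C", exposure=0.0005, reference_value=0.05),
]

hi = hazard_index(group)
for c in group:
    print(f"{c.name}: HQ = {hazard_quotient(c):.2f}")
print(f"Hazard index = {hi:.2f}")
print("Refine assessment" if hi > 1 else "No concern at this tier")
```

Here each hypothetical component is below its own reference value, yet the summed HI exceeds 1, which is the kind of combined effect that assessing each substance in isolation would miss.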

23. For each of the steps in the risk assessment, a stepwise approach is applied.

24. The stepwise approach for hazard identification and characterisation using a component-based assessment is guided by dos and don’ts to be considered as part of the process (Cattaneo et al., 2023).

25. Step 1: risk assessors have the opportunity to confirm or refine the initial grouping of chemicals performed at the problem formulation stage. If needed, a refinement can be made using WoE approaches, dosimetry (toxicokinetics) or mechanistic data (i.e., mode of action (MoA) and AOP information).

26. Step 2: the relevant entry tier for the assessment is decided based on the purpose of the assessment and the available data. Hazard information is collected for each individual chemical and may include toxicity data, reference points, reference values, mechanistic data, toxicokinetic information and relative potency information. In case of data-poor situations, a list of possibilities to fill data gaps is identified.

27. Step 3: the evidence for independent action between individual chemicals of the assessment group and the potential for interactions are assessed. Within step 3, the most appropriate approach for risk characterisation is defined.

28. Step 4: for each individual component of the assessment group, a reference point and uncertainty factors are derived to obtain appropriate reference values for the relevant tier. Such reference values can be used for individual components or for the whole group (expressed as equivalents of an index chemical).

29. Step 5: the hazard metrics for individual components are summarised, and assumptions and uncertainties are listed.
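The idea of expressing a group as "equivalents of an index chemical" can be illustrated with a relative potency factor (RPF) calculation: each component's exposure is scaled by its potency relative to an index chemical, the scaled exposures are summed, and the total is compared with the index chemical's reference value. All RPFs, exposures and the reference value below are hypothetical and chosen only to show the arithmetic.

```python
# Hypothetical relative potency factors (potency relative to the index chemical)
rpf = {"index_chemical": 1.0, "analogue_1": 0.5, "analogue_2": 0.1}

# Hypothetical exposures, mg/kg bw per day
exposure = {"index_chemical": 0.002, "analogue_1": 0.004, "analogue_2": 0.01}

# Hypothetical reference value of the index chemical, mg/kg bw per day
index_reference_value = 0.01


def index_equivalents(exposure: dict[str, float], rpf: dict[str, float]) -> float:
    """Total group exposure expressed as index-chemical equivalents."""
    return sum(exposure[name] * rpf[name] for name in exposure)


total_eq = index_equivalents(exposure, rpf)
ratio = total_eq / index_reference_value
print(f"Total exposure: {total_eq:.4f} mg index-chemical eq/kg bw per day")
print(f"Fraction of index reference value: {ratio:.2f}")
```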

30. The speaker then discussed the Guidance Document on Scientific criteria for grouping chemicals into assessment groups for human risk assessment of combined exposure to multiple chemicals.

31. Whenever available, AOP information should be used to define assessment groups and for grouping chemicals (OECD, 2018).

32. Different Molecular Initiating Events (MIEs) can converge on common key events and end up in a specific AOP. Chemicals that share a common adverse outcome, and whose AOPs are known, should be grouped together in the same assessment group. This approach, which embraces a range of chemicals covering one or more different AOPs, may involve chemicals that trigger: the same AOP by interacting with the same MIE; separate AOPs that converge at an intermediate key event; or an AOP that leads to the same adverse outcome without converging with other AOPs at an intermediate key event.

33. The EFSA Committee noted that these three categories include all chemicals with the same adverse outcome but distinct MIEs, thus having comprehensive mechanistic understanding. Such mechanistic information anchored to a Mode of Action (MoA), AOP or its related network allows the uncertainty of the chemical grouping to be reduced. However, if the available evidence indicates that chemicals with a common MoA do not contribute to the combined effects based on exposure and potency considerations, these may be excluded from the final assessment group.
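The three AOP-based categories described above can be expressed as a small classification over a hypothetical AOP table, in which each AOP is an ordered list of events from the MIE through key events (KEs) to the adverse outcome. Chemicals whose AOPs end in the same adverse outcome form the assessment group, and each pair of members is then labelled by how their pathways relate. All chemical, event and outcome names are invented for illustration, and the one-AOP-per-chemical mapping is a simplifying assumption.

```python
from itertools import combinations

# Hypothetical AOPs: ordered events from MIE (first) to adverse outcome (last)
AOPS = {
    "aop_1": ["MIE_receptor_X", "KE_1", "KE_2", "AO_liver_damage"],
    "aop_2": ["MIE_enzyme_Y", "KE_3", "KE_2", "AO_liver_damage"],
    "aop_3": ["MIE_channel_Z", "KE_4", "AO_liver_damage"],
    "aop_4": ["MIE_receptor_W", "KE_5", "AO_thyroid_effect"],
}

# Hypothetical mapping of each chemical to the AOP it is known to trigger
CHEMICALS = {"chem_a": "aop_1", "chem_b": "aop_1", "chem_c": "aop_2",
             "chem_d": "aop_3", "chem_e": "aop_4"}


def assessment_group(outcome: str) -> list[str]:
    """Chemicals whose AOP terminates in the given adverse outcome."""
    return sorted(c for c, aop in CHEMICALS.items() if AOPS[aop][-1] == outcome)


def relationship(chem_1: str, chem_2: str) -> str:
    """Label a pair of group members by how their AOPs relate."""
    path_1, path_2 = AOPS[CHEMICALS[chem_1]], AOPS[CHEMICALS[chem_2]]
    if path_1[0] == path_2[0]:
        return "same MIE"
    # Intermediate key events exclude the MIE (first) and adverse outcome (last)
    if set(path_1[1:-1]) & set(path_2[1:-1]):
        return "converge at intermediate KE"
    return "same adverse outcome only"


group = assessment_group("AO_liver_damage")
print("Assessment group:", group)
for a, b in combinations(group, 2):
    print(f"{a} / {b}: {relationship(a, b)}")
```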

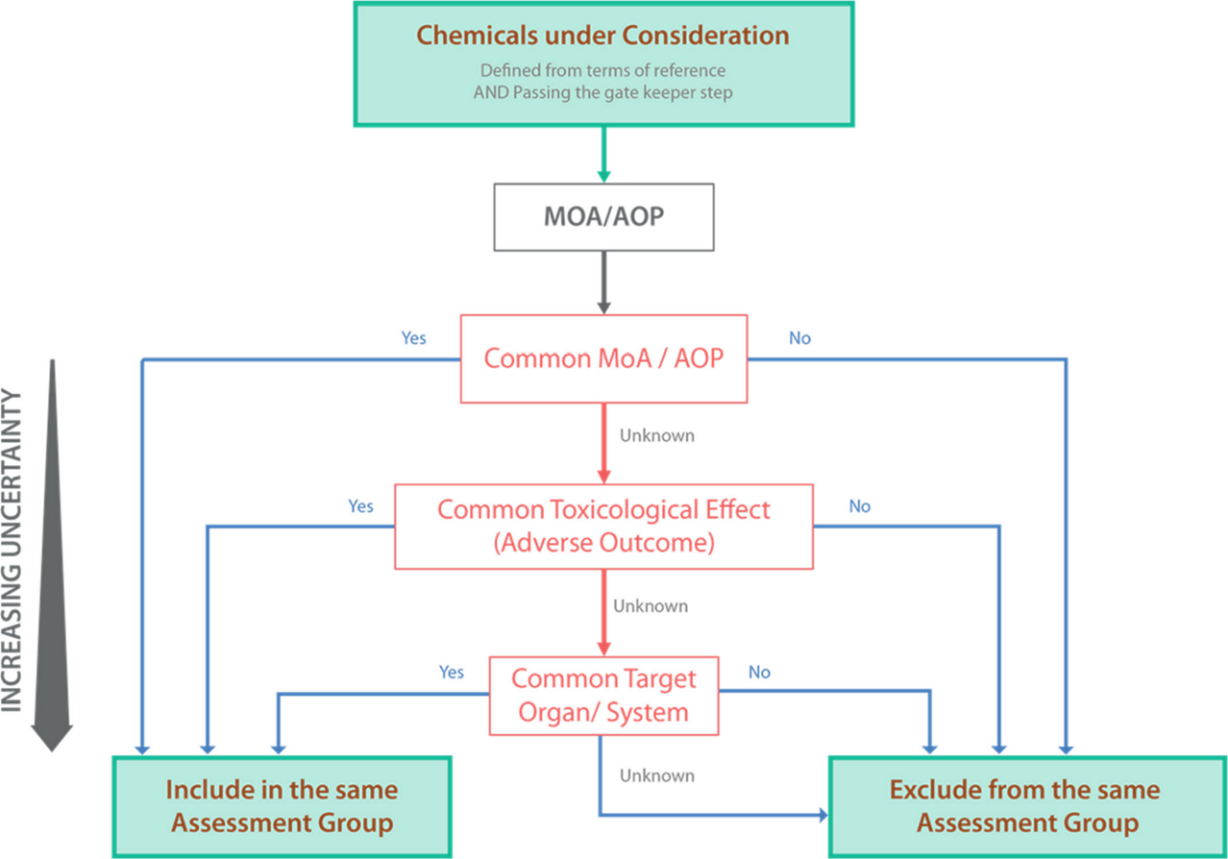

Figure 1. Top-down hierarchical process for grouping chemicals into assessment groups using hazard-driven criteria. The thickest arrow indicates the gold standard hazard-driven criteria (MoA/AOP) with the lowest uncertainty.

34. The scheme (Figure 1) that was created is a hierarchical framework to apply hazard-driven criteria for the grouping of chemicals into assessment groups using available mechanistic information (MoA or AOP) as the gold standard. The top-down hierarchical framework supports generating such mechanistic data and reducing uncertainty in the grouping process.

35. One of the challenges of this approach is that a single receptor can lead to several critical effects. Some MIEs have divergent outcomes: for example, activation of the Aryl Hydrocarbon Receptor (AhR) by dioxin and compounds with dioxin-like toxicity can lead to a whole range of outcomes, for some of which the AOP is known and for others of which little to nothing is known. Nevertheless, as these outcomes share the same MIE, the adverse effects are related, and it can be argued that the chemicals should be grouped even if there are no data on the same adverse outcome for the different members of the group. Such grouping would therefore be decided on a case-by-case basis.

36. The speaker then described another issue using the example of Perfluoroalkyl and Polyfluoroalkyl Substances (PFAS). EFSA assessed the effects of these chemicals based on epidemiological studies showing apical effects, with no knowledge of the AOP, only that there is an effect for a group of chemicals. This was the case for the EFSA opinion on the risk to human health related to the presence of perfluoroalkyl substances in food. The general conclusions were presented:

- “It was considered that similarities in chemical properties and effects warrant a mixture approach for a number of PFASs…. Also, in terms of effects, these compounds in general show the same effects when studied in animals.”

- “Based on several similar effects in animals, toxicokinetics and observed concentrations in human blood, the Panel on Contaminants in the Food Chain (CONTAM) decided to perform the assessment for the sum of four PFASs: Perfluorooctanoic acid (PFOA), Perfluorononanoic acid (PFNA), Perfluorohexanesulphonic acid (PFHxS) and Perfluorooctane sulfonate (PFOS)…Equal potencies were assumed for the four PFASs included in the assessment.”

- “Based on available studies in animals and humans, effects on the immune system were considered the most critical for the risk assessment…. Since accumulation over time is important, a tolerable weekly intake (TWI) of 4.4 ng/kg bw per week was established.”
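The approach quoted above (summing four PFASs with equal potencies and comparing against a weekly, rather than daily, value because accumulation over time matters) reduces to simple arithmetic. The sketch uses the TWI from the quotation; the daily intake values are hypothetical and are not EFSA exposure estimates.

```python
TWI_NG_PER_KG_BW_WEEK = 4.4  # TWI for the sum of PFOA, PFNA, PFHxS and PFOS

# Hypothetical mean daily dietary intakes, ng/kg bw per day (illustrative only)
daily_intake = {"PFOA": 0.15, "PFNA": 0.05, "PFHxS": 0.05, "PFOS": 0.25}


def weekly_sum_intake(daily: dict[str, float]) -> float:
    """Sum the four PFASs with equal potency (weight 1) and scale to one week."""
    return 7 * sum(daily.values())


weekly = weekly_sum_intake(daily_intake)
print(f"Sum of 4 PFASs: {weekly:.2f} ng/kg bw per week")
print(f"Percentage of TWI: {100 * weekly / TWI_NG_PER_KG_BW_WEEK:.0f}%")
```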

37. In the speaker's personal view, the problem was that the studies were done in the Faroe Islands, where individuals were exposed not only to PFAS but to a whole range of chemicals from the available food. Although this was a problem with the Faroe Islands study, another study from Germany supported the same effects (Kotthoff et al., 2020). Effects on the immune system were the critical effects, probably because the molecules resemble lipids and act as inflammatory agents, but the AOP or MoA associated with the critical effect on antibody titres against different vaccines was unknown. Again, the speaker emphasised that this was his personal view.

38. Moving forward, EFSA aimed to develop and implement a NAM-based Integrated Approach to Testing and Assessment (IATA) to explore the mode of action for the observed immunosuppressive effects and to address the immunotoxicity of PFAS such as PFOS and PFOA, including the assessment of a common MoA and differences in potency.

Weight of Evidence

39. Professor Maged Younes presented on Weight of Evidence (WoE).

40. EFSA Guidance on the use of the weight of evidence approach in scientific assessments was developed by EFSA, together with guidance documents on Biological Relevance and on Uncertainty, with the aim of increasing the transparency of scientific assessments. WoE provides a systematic way to assess and integrate available scientific evidence addressing an assessment question.

41. The term WoE is often used colloquially to emphasise that all the evidence, confirmatory and/or contradictory, was taken into account in reaching a conclusion on an assessment question. The approaches and methods used in such ‘non-formalised’, inherent weighing of the evidence are mostly not spelled out. However, WHO (2009) defines WoE as “a process in which all of the evidence considered relevant for a risk assessment is evaluated and weighted”.

42. In conducting a transparent weight of evidence assessment, the following are required:

- A systematic, transparent way of collecting, assembling, and synthesizing the overall evidence.

- Systematic review methodologies to the extent possible.

- Clear qualitative and quantitative evidence.

- Clear description of the approaches to weighing and assembling the evidence.

- Clear reporting of data and methodologies.

- Considerations of data quality and assessment of causal relationships.

43. Working definitions were then discussed. Weight of evidence is the extent to which evidence supports possible answers to a scientific question. This is reached by assessment of the balance of all evidence and can be expressed qualitatively or quantitatively. The evidence is systematically assessed in such a way that individual pieces of evidence (distinct element of information) are assembled into lines of evidence (a set of evidence of similar type). At each step, the evidence is weighed against relevance, reliability and, at higher tiers of integration, consistency.

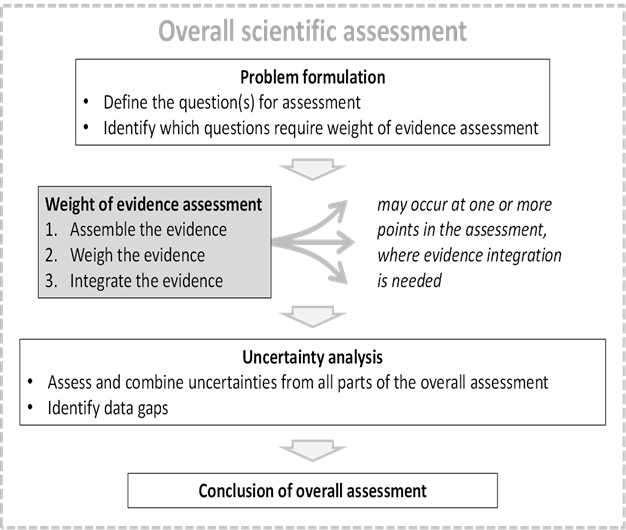

44. WoE assessment for scientific assessment as a 3-step process was then explained (Figure 2). The question to be addressed by each weight of evidence assessment is defined by problem formulation, which is a step preceding the scientific assessment as a whole. This consists of defining the question(s) for the assessment and identifying which questions are required for the WoE assessment. WoE steps are assembling, weighing and integrating the evidence. The output of WoE assessment feeds either directly or indirectly into the overall conclusion of the scientific assessment. Although WoE assessment itself addresses some of the uncertainty affecting the scientific assessment, a separate step of uncertainty analysis is still needed to take account of any other uncertainties affecting the overall assessment.

Figure 2. Diagrammatic illustration of weight of evidence assessment as a 3-step process which may occur at one or more points in the course of a scientific assessment.

45. Relevance (including biological relevance), reliability and consistency are the three basic properties against which evidence is weighed in a WoE assessment.

46. Reliability is the extent to which the information comprising a piece or line of evidence is correct, i.e., how closely it represents the quantity, characteristic or event that it refers to. This includes both accuracy (degree of systematic error or bias) and precision (degree of random error).

47. Relevance is the contribution a piece or line of evidence would make to answer a specified question, if the information comprising the evidence was fully reliable. In other words, how close is the quantity, characteristic or event that the evidence represents to the quantity, characteristic or event that is required in the assessment. This includes biological relevance (EFSA, 2017) as well as relevance based on other considerations, e.g., temporal, spatial, chemical, etc.

48. Consistency is the extent to which the contributions of different pieces or lines of evidence to answering the specified question are compatible.

49. It was stated that there are no prescriptive approaches to WoE, so broad descriptive categories were used, for example: listing evidence, best professional judgement, causal criteria, rating, and quantification. Criteria have been defined to facilitate the choice of the WoE method(s) to be applied. These include the availability of guidance, the expertise needed, the ease of understanding for the non-specialist, the time needed, as well as the transparency and reproducibility of the methods, and aspects of variability and uncertainty.

50. Then the speaker discussed the classification of approaches, adapted from Linkov et al. (2009).

51. The categories of approach are:

- Listing evidence: Presentation of individual lines of evidence without attempt at integration.

- Best professional judgement: Qualitative integration of multiple lines of evidence.

- Causal criteria: A criteria-based methodology for determining cause and effect relationships.

- Rating: Assess and integrate based on several factors, often derived from the Bradford Hill considerations (e.g. International Agency for Research on Cancer (IARC), Occupational Safety and Health Administration (OSHA), Office of Health Assessment and Translation (OHAT)).

- Quantification: Various statistical methods including meta-analysis.

52. Overall, the steps of a weight of evidence approach are the following:

- Assemble the evidence: Identify potentially relevant evidence (consider data gaps); select the evidence to be included; group into lines of evidence.

- Weigh the evidence: assess reliability, relevance and consistency; decide on method(s) for weighing and integrating the evidence (qualitative, quantitative, several); apply chosen methods and summarize results in a form allowing integration.

- Integrate the evidence: Consider the conceptual model for evidence integration to be applied (e.g. combining evidence with differing weight); assess consistency of the evidence (e.g. compatibility of lines of evidence); apply methods chosen for integration; develop conclusion for WoE assessment (summary of results, the way the conclusion will be expressed; and procedure for expert judgement if applied).

- Uncertainty and influence assessment.

- Potential for iterative refinement.
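As a purely illustrative sketch of the assemble, weigh and integrate steps listed above, the example groups hypothetical pieces of evidence into lines of evidence, weighs each piece by simple reliability and relevance scores, and reports how consistently the lines point in the same direction. The 0 to 1 scores, the multiplicative weighting and the consistency measure are assumptions made for this example; as noted earlier, there is no prescriptive WoE scoring scheme, and expert judgement remains central.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Evidence:
    line: str           # line of evidence this piece belongs to
    supports: bool      # True if it supports the hypothesis under assessment
    reliability: float  # hypothetical 0-1 score
    relevance: float    # hypothetical 0-1 score


def weigh_lines(pieces: list[Evidence]) -> dict[str, float]:
    """Net weighted support per line of evidence, in the range [-1, 1]."""
    lines = defaultdict(list)
    for p in pieces:                          # assemble into lines of evidence
        weight = p.reliability * p.relevance  # weigh each piece
        lines[p.line].append(weight if p.supports else -weight)
    return {name: sum(ws) / len(ws) for name, ws in lines.items()}


def consistency(line_scores: dict[str, float]) -> float:
    """Fraction of lines whose net direction agrees with the majority direction."""
    positive = sum(1 for s in line_scores.values() if s > 0)
    negative = sum(1 for s in line_scores.values() if s < 0)
    return max(positive, negative) / len(line_scores)


pieces = [
    Evidence("animal studies", True, 0.9, 0.7),
    Evidence("animal studies", True, 0.8, 0.7),
    Evidence("in vitro", True, 0.7, 0.5),
    Evidence("in vitro", False, 0.4, 0.5),
    Evidence("epidemiology", False, 0.5, 0.9),
]
scores = weigh_lines(pieces)
for name, score in scores.items():
    print(f"{name}: net support {score:+.2f}")
print(f"Consistency across lines: {consistency(scores):.2f}")
```

In this invented example, two lines lean towards the hypothesis and one against it, so the output flags partial consistency that would then need to be explained in the uncertainty assessment rather than averaged away.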

53. Reporting the outcome of a WoE assessment should cover all relevant information, in particular the approach to collecting and assembling the data, the type of data selected, the lines of evidence identified, and the method(s) used for evidence integration. Finally, uncertainty and variability issues should be addressed.

Benchmark Dose Modelling

54. Professor Þórhallur (Thor) Ingi Halldórsson presented on benchmark dose (BMD) modelling and the EFSA Guidance on the use of the benchmark dose approach in risk assessment.

55. In simple terms, BMD modelling is two-dimensional curve fitting of the dose-response relationship. A Benchmark Response (BMR) is defined as a low but measurable change in response that reflects adversity. The dose corresponding to the BMR is the BMD, and the lower confidence limit of the BMD (the BMDL) is then used as the reference point (RP).
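To make the curve-fitting idea concrete, the sketch below fits a single exponential model to hypothetical continuous dose-response data, finds the dose at which the fitted response changes by a 5% benchmark response, and estimates a lower confidence limit on that dose with a residual bootstrap. This is a simplified, single-model frequentist illustration under stated assumptions (log-normally distributed responses, one model, bootstrap confidence limit); it is not the EFSA 2022 procedure, which uses a Bayesian approach with model averaging over a set of models. The data and function names are hypothetical.

```python
import math
import random
import statistics


def fit_exponential(doses, responses):
    """Fit y = a * exp(b * d) by least squares on ln(y); returns (ln a, b)."""
    logs = [math.log(y) for y in responses]
    d_mean, l_mean = statistics.fmean(doses), statistics.fmean(logs)
    sxx = sum((d - d_mean) ** 2 for d in doses)
    sxy = sum((d - d_mean) * (l - l_mean) for d, l in zip(doses, logs))
    b = sxy / sxx
    return l_mean - b * d_mean, b


def bmd_from_slope(b, bmr=0.05):
    """Dose at which the fitted response differs from background by a fraction bmr."""
    if b == 0:
        return math.inf  # no dose-related change in the fitted curve
    target = 1 + bmr if b > 0 else 1 - bmr  # increase or decrease from background
    return math.log(target) / b


def bmdl_bootstrap(doses, responses, bmr=0.05, n_boot=2000, seed=1):
    """Approximate lower one-sided 95% bound on the BMD from a residual bootstrap."""
    ln_a, b = fit_exponential(doses, responses)
    fitted = [ln_a + b * d for d in doses]
    residuals = [math.log(y) - f for y, f in zip(responses, fitted)]
    rng = random.Random(seed)
    bmds = []
    for _ in range(n_boot):
        resampled = [math.exp(f + rng.choice(residuals)) for f in fitted]
        _, b_star = fit_exponential(doses, resampled)
        # A resample with no effect in the original direction implies an unbounded BMD
        bmds.append(bmd_from_slope(b_star, bmr) if b_star * b > 0 else math.inf)
    bmds.sort()
    return bmds[int(0.05 * n_boot)]  # 5th percentile of bootstrap BMDs


# Hypothetical study: 4 dose groups (mg/kg bw per day), 3 animals each
doses = [0, 0, 0, 10, 10, 10, 30, 30, 30, 100, 100, 100]
responses = [20.1, 19.6, 20.4, 20.9, 21.5, 20.6, 23.0, 22.1, 22.8, 30.2, 29.1, 31.0]

bmd = bmd_from_slope(fit_exponential(doses, responses)[1])
bmdl = bmdl_bootstrap(doses, responses)
print(f"BMD05  = {bmd:.1f} mg/kg bw per day")
print(f"BMDL05 = {bmdl:.1f} mg/kg bw per day (reference point)")
```

Unlike a NOAEL, which can only take one of the tested dose values, the BMD here falls between tested doses and uses every animal in the study, which is the advantage referred to in the next paragraph.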

56. There are multiple advantages in using a reference point derived from the results of the whole experiment (the dose-response relationship) instead of a simple pair-wise comparison (as used to determine the no observed adverse effect level (NOAEL)).

57. EFSA has updated its guidance on BMD modelling over the years, with the first guidance published in 2009, followed by updates in 2015 and 2022. In the 2009 guidance, the aim was to assess “the utility of the benchmark dose (BMD) approach, as an alternative to the traditionally used no observed adverse effect level (NOAEL) approach”. The conclusion was that the BMD approach is of value…“in situations where the identification of a NOAEL is uncertain; when providing a reference point for margin of exposure (MOE) and a dose-response assessment using epidemiological data”.

58. In 2015, EFSA updated their guidance. It provided much more information on how to apply the BMD approach (excluding human studies). Model averaging is recommended as the preferred method of estimating the BMD and its confidence interval. The use of BMD modelling was not only recommended but the use of the NOAEL was strongly discouraged.

59. The speaker then discussed the BMD approach and the use of the NOAEL, picking up the main points from EFSA, 2015 as follows: “the benchmark dose approach is a scientifically more advanced method compared to the NOAEL approach for deriving a Reference Point (RP)”; “BMDL takes into account the statistical limitations of the data better than the NOAEL”; The Scientific Committee “strongly recommends that the BMD approach is used for the determination of the RPs for establishing HBGVs and for calculating MOEs”.

60. In 2022, EFSA updated its guidance following a workshop organised by EFSA in March 2017 to discuss commonalities and divergences in the various approaches for BMD analysis worldwide, and the update of Chapter 5 on dose response assessment of WHO/IPCS Environmental Health Criteria 240 (WHO, 2020). The Scientific Committee decided to again update its guidance in order to align the content of the document and harmonise further EFSA’s approach with those of its partners.

61. The main changes are a shift from a frequentist to a Bayesian approach and a more extended set of models for model averaging; the normal distribution is now “finally” included to harmonise with other agencies; and the guidance is more open to the use of alternative solutions when limited dose-response information can be extracted. It was noted that the United States Environmental Protection Agency (EPA) has 10 different empirical conditions to flag “questionable” model outputs.

62. It is important to note that the NOAEL is still a method available as a last resort.

63. In summary, the current EFSA guidance on BMD methodology has been aligned with that of other agencies (US EPA, WHO), and the methodology and its computational platform have been implemented “close to perfection”; however, there is always a “but”. BMD modelling may not always be the most sensible solution, but it should be one of several options in the toolbox. This conclusion is supported by “a patchwork of conditions” found in the guidance to eliminate exceptions.

64. The series of guidance updates by EFSA have resulted in relatively well thought through guidance on how to perform BMD modelling. It is currently difficult to see how the modelling aspect can be improved further, although the computational platform could be improved, and the underlying assumptions could be made more transparent.

65. EFSA recommends the use of BMD modelling by default and strongly discourages the use of the NOAEL approach. However, models cannot extract more information than the underlying data contains.

66. Experiments with few dose groups (n=3-5) and few animals may not always contain the level of information that the risk assessors need. Future guidance should allow for alternative approaches giving more weight to common sense along with statistical considerations.

Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH)

67. Mr Keith Bailey talked about REACH in the regulatory context and some of the things the COT might want to take into account in its guidance.

68. The speaker first introduced REACH Article 1. The purpose of this regulation is to ensure a high level of protection of human health and the environment, including the promotion of alternative methods for assessment of hazards of substances, as well as the free circulation of substances while enhancing competitiveness and innovation.

69. This regulation is based on the principle that it is for manufacturers, importers and downstream users to ensure that they manufacture, place on the market or use such substances that do not adversely affect human health or the environment. Its provisions are underpinned by the precautionary principle.

70. It is important to note that this is the paradigm shift of REACH; responsibilities fall on both industry and the regulator. Pre-REACH, when new substances were put on the market it was the responsibility of industry to do the risk assessment before those new products got onto the market. For existing substances, the regulator did the risk assessment. Now everything is put on the same footing, and the responsibility primarily lies with industry. Therefore, it is important to flag that guidance needs to work for industry not just regulators.

71. REACH primarily works at the level of the individual substance, but both industry and regulator risk assessments can be carried out on groups of chemicals. Industry may need to consider the chemicals it produces as a group and think about how to do so. The regulator can view grouping in different ways, by substance or by similarity of use. Examples include CMRs (substances that are carcinogenic, mutagenic or toxic to reproduction), textiles and tattoo inks.

72. Identification of hazard and assessment and management of risk are threads that run throughout REACH.

73. Next, the speaker discussed the responsibility of the manufacturer / importer for chemical safety assessment, which applies only to substances placed on the market above a specified tonnage per year.

74. The purpose is for manufacturers and importers to assess and document that the risks arising from the substance they manufacture or import are adequately controlled during manufacture and their own use(s), and that others further down the supply chain can adequately control the risks.

75. REACH looks at individual substances, in contrast to regulatory regimes that start with an end product. Much of the information that REACH requires is designed to answer questions regardless of what the end product is.

76. Step 1: hazard assessment (human health, physicochemical, environmental, and Persistent, Bioaccumulative and Toxic (PBT) / very Persistent and very Bioaccumulative (vPvB) assessment).

Step 2: where the hazard assessment identifies specified hazard classes or assesses the chemical as PBT/vPvB it must be followed by exposure assessment (exposure scenarios & exposure estimation) followed by risk characterisation, which considers the human populations and the environmental spheres for which exposure to the substance is known or reasonably foreseeable and the overall environmental risk.

77. The chemical safety assessment is written up in the Chemical Safety Report and submitted as part of the registration dossier.

78. It is for manufacturers, importers, and downstream users to ensure that they manufacture, place on the market or use such substances that do not adversely affect human health or the environment.

79. For the vast majority of chemicals, roughly 5,000 substances, the duty to carry out risk assessment lies only with industry.

80. The role of the regulator: the Health and Safety Executive (HSE) compliance-checks a proportion of registration dossiers (around 100), with a minimum of 20% of registrations compliance-checked. These checks assess the quality of the work, but the HSE does not redo the risk assessment.

81. Processes where there is an element of regulator risk assessment include substance evaluation, prioritisation for authorisation and restriction. Where there are gaps, the regulator would then require more information from industry. Risk management option analysis (non-statutory), for example for Classification, Labelling and Packaging, is also required.

82. Granting of authorisations is back to regulatory scrutiny of industry applications.

83. REACH registration is based on standard test methods, such as the Organisation for Economic Co-operation and Development (OECD) test methods, which would be added into the REACH Annexes. It is important to distinguish between the standard test methods and what is necessary and appropriate for a particular chemical.

84. REACH Annexes have seen changes to standard test methods for some endpoints with replacement / reduction of animal testing. REACH has seen a lot of refinement in animal testing over the years.

85. Therefore, it is a case-by-case consideration: endpoint-specific or general waivers, last resort principle, testing proposals, regulatory requirements from compliance checking or substance evaluation and even using the term weight of evidence. One would have to justify why such a chosen methodology has been used. However, who demonstrates efficacy in these cases? Standard test method OECD validation.

86. There will be challenges in the resources available to assess the efficacy of any NAMs proposed.

Roundtable Discussions from Session 1

What could work better?

87. It was noted that EFSA may apply multiple uncertainty factors, which can be problematic and difficult to justify. This should therefore be avoided where possible in the new COT guidance, which should make every effort to replace these uncertainty factors with data-derived factors. However, avoiding uncertainty factors will require more knowledge than is perhaps currently available.

88. More transparency is needed in how the COT performs its risk assessments. This may require formalising the COT's way of working and then integrating it into compatible guidance that is already available.

89. The COT needs an overarching guideline/guidance document, but there is reluctance to develop its own framework; it would be preferable to adopt existing guidance. However, there is uncertainty about which guidance would be most applicable, whose choice this would be, and what criteria would need to be fulfilled to determine which sections would be appropriate to select.

90. There needs to be a clear delineation of who is responsible for what across the different sister committees and SACs involved in chemical risk assessment more generally.

91. The guidance should allow for the use of grouping and weight of evidence. Following on from the earlier presentation, there was a brief discussion of which sources should contribute to WoE. It was noted that the evidence used must be based on scientific fact, and that sources of opinion, such as social media, would not be appropriate. However, it was accepted that evidence can arise from less typical sources and still contribute towards WoE.

92. Resourcing will be key to using these new tools. There should therefore be dialogue between the regulator and industry to encourage harmonisation.
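For context, and not drawn from the workshop discussion itself: a health-based guidance value (HBGV) such as an ADI or TDI is conventionally derived by dividing a point of departure (POD) by uncertainty factors, with the common default of 100 made up of 10 for interspecies and 10 for intraspecies differences. Under the IPCS framework for chemical-specific adjustment factors, each factor of 10 is split into toxicokinetic (TK) and toxicodynamic (TD) components, and it is these subfactors that data-derived values can replace. The notation below is an illustrative assumption:

```latex
\begin{align*}
\mathrm{HBGV} &= \frac{\mathrm{POD}}{\mathrm{UF}_{\mathrm{inter}} \times \mathrm{UF}_{\mathrm{intra}}} \\
\mathrm{UF}_{\mathrm{inter}} &= 10 = \underbrace{10^{0.6}}_{\mathrm{TK}\;\approx\;4.0} \times \underbrace{10^{0.4}}_{\mathrm{TD}\;\approx\;2.5} \\
\mathrm{UF}_{\mathrm{intra}} &= 10 = \underbrace{10^{0.5}}_{\mathrm{TK}\;\approx\;3.16} \times \underbrace{10^{0.5}}_{\mathrm{TD}\;\approx\;3.16}
\end{align*}
```

Replacing, for example, the interspecies TK default of 4.0 with a value derived from comparative kinetic data would change only that term, leaving the other defaults in place.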

How does COT guidance fit with other guidance?

93. It was suggested that there should be a review of currently available guidance that represents good practice, to determine what could be learnt from it and then consider what would be appropriate for Great Britain (GB) specifically. It was noted that there is a lot of similarity between guidance from different bodies; by reviewing all the available guidance, the COT could decide which aspects would and would not work for GB. It was indicated that harmonisation across guidance would be preferable. While the implications of diverging too far from the European Union should be considered, this chance to review guidance from a GB perspective should be seen as an opportunity for GB to identify gaps and become a leader from both a regulatory and an industry point of view.

94. Harmonisation and lessons learnt from this other guidance could be applied to any GB guidance.

95. The guidance could be rewritten for applicants in the various regulated product areas and for the relevant committees and JEGs. However, it was stated that rewriting the guidance to make it more relevant and bespoke for GB applications was a lower priority, and that committees currently do not have time to discuss this while large numbers of dossiers remain to be evaluated.

What other guidance is out there?

96. Sources of guidance from which GB could learn include Health Canada, the European Chemicals Agency (ECHA), WHO and the General Data Protection Regulation (GDPR).

97. It was agreed that this information is readily available and easy to access, meaning individuals could select appropriate guidance based on their needs at the time.

98. It was questioned whether the guidance would be applicable only to the COT; it was agreed that any new guidance should work for the COT's sister committees, the Committee on Mutagenicity (COM) and the Committee on Carcinogenicity (COC), as well as for SACs advising other government departments.

Can priorities be identified?

99. Concerns were raised that, should the GB guidance differ too much from that of other existing bodies, industry would choose to work to EFSA or US guidance rather than GB guidance. However, it was considered that GB should use good practice and be seen as a leader in the field. It was added that, from a scientific point of view, the principles behind the guidance are (and should be) similar across existing bodies, and that this exercise was more about what could be added to GB guidance to tailor it to what works best for GB specifically. Emphasis was placed on the GB guidance working in the way most beneficial to GB.

100. It was agreed that GB should liaise and build relationships with other national agencies, especially countries with their own guidance such as Canada and Australia. It was highlighted that Health Canada in particular had done a lot of work on AOPs which may be useful for GB guidance.

101. Members asked whether more quantitative methods could be incorporated, and how dose-response could be incorporated.

102. It was stated that a useful development would be to produce a skeleton of how assessments could be done at the COT.

103. It was stated that it would be important to define the scope of the guidance because the remit of the COT is so broad; any new guidance may also have implications for other government departments and agencies, such as HSE and the Department for Environment, Food & Rural Affairs (DEFRA). The approach should therefore be harmonised across these GB agencies so that advice can be directly compared. In light of this, it was further queried whether this should be a COT decision at all and, if so, how far the COT's remit would extend. It was suggested that, given the number of departments and committees this guidance could affect, the task was perhaps beyond the scope of the COT and sat above any individual department or agency. Concerns were raised that a lot of resource would be required to reorganise the system, and that it might be better for an alternative government body to take responsibility for restructuring the entire system.

104. There was discussion of how NAMs may be incorporated into the guidance, their relevance for human toxicity, and whether and how wholesale replacement of more traditional approaches could be effected in practice. NAMs, for instance, can throw up unknown areas of concern and potentially identify non-apical endpoints that are difficult to interpret. Such uncertainty can discourage industry from using NAMs in regulated product applications, and it was suggested that the SACs could apply a less stringent evaluation to such studies in their dossier evaluations. It was stated that animal studies will not be removed entirely, but animal data should be used better; many important NAM approaches still rely on animal data, but use them in new ways. Indeed, it was concluded that no one size fits all, and that relationships or correspondences between NAM outcomes and those in more traditional toxicology studies, epidemiological studies and biomarker studies should be identified and pursued (e.g., using NAMs to fill data gaps in the animal studies). There is a need to move towards more AOP-driven approaches. It was stated that toxicological evaluations relating to NAMs are in a different place from where they were, for example, 10 years ago. Although academics may have been overly optimistic about the prospects of NAMs, there has been a shift, and NAMs are now being applied in more appropriate areas.

Other topics raised

105. The question was raised of whether regulation should follow science rather than the other way around. Evolving regulations gradually over time is a good approach but could be confusing and require too much work. A descriptive remit may be beneficial but could also be too specific. Overall, risk assessments and guidance should be science-led, should not stipulate specific test requirements, and should adapt as the science changes.

106. It was discussed how the Advisory Committee on Novel Foods and Processes (ACNFP) still follows the EFSA guidelines when evaluating novel foods, and that this may not always be the best way forward. For instance, these are products intended for direct consumption, overt toxicity is rarely seen in animal studies, standard 90-day studies use large numbers of animals, and some of the studies submitted are of low quality (e.g., university final-year PhD studies that are not fit for purpose). It was questioned whether this comprehensive toxicological evaluation approach should be actively discouraged. An important issue raised in this respect, and again in Session 3, was the distinction between different chemicals based on their intended (and unintended) uses, and whether and how this should be reflected in guidance on their evaluation. With respect to REACH, for instance, it was suggested that the approach is fundamentally needs-based rather than hazard-based, being driven by the volumes of chemicals.

107. Regarding thresholds, members discussed that there may not be a practical threshold, but that for genotoxicity specifically there must be a threshold. It was noted that such thresholds can be difficult to identify.

108. The BMD approach was considered a considerable improvement on what had been done before. This was then linked back to genotoxicity, where it was stated that there are no suitable tests that allow the dose response to be adequately characterised, so it would not be possible to quantify the risk.

Session 2: What we need to improve?

Building regulations

109. Dame Judith Hackitt presented on building a safer future: changing an industry culture; the need for Leadership, Pride, Professional Competence and an effective Regulatory Framework.

110. Although Dame Judith's talk covered a very different area from chemical risk assessment, it was noted that it is useful to have someone from outside look in and give an objective, fairly high-level assessment of whether a system is working.

111. The speaker then gave a timeline relating to the Grenfell Inquiry. In July 2017, a public inquiry was announced, and it is still ongoing. In August 2017, an independent Review of Building Regulations and Fire Safety started, with the brief: what went wrong, and could this affect other areas? The final report was published in May 2018. In July 2018, an Industry Safety Steering Group was established, and in late 2019 a new Building Safety Regulator was announced. In July 2020, a draft Building Safety Bill was published for scrutiny, and in April 2022 the Building Safety Act received Royal Assent. The detailed publication is due next year.

112. The speaker then discussed how they had aimed to ensure that the process is followed through and delivered in the right way. Continuity and clarity of purpose are key in the convoluted process of getting a new regulatory system up and running and delivering the right culture. The regulatory system is there for a purpose: to change behaviours, to deliver the right behaviours and to deliver the right outcomes. If the focus is only on regulation, without thinking about the behaviours that regulation drives, the focus is not on the right things.

113. We need to think about transparency in the information being submitted or omitted.

114. The process was already complicated, but many other things also happened in the world, such as Brexit, COVID-19, massively increased awareness of climate change and political uncertainty, which complicated the process further and caused priorities to change over time. Therefore, when you want to make change in a system, you need a champion to maintain focus and drive.

115. The speaker discussed how she reviewed the system and found that it was broken, not fit for purpose, and needed to be fixed. In particular, she focused on how everything is siloed and not stitched together. The speaker then elaborated on the diagnosis of the problem.

116. It was found that the current regulatory system for ensuring fire safety in high-rise and complex buildings is weak and ineffective, with little or no enforcement; therefore, a new regulator is needed. Industry behaviour was characterised as a “race to the bottom”, with significant evidence of gaming the system. Conflicts of interest abound. Design, change management and record keeping are poor during construction, occupation and refurbishment. In general, neither the experts nor the residents are listened to. The culture of the whole construction and built environment industry must change. Product testing, marketing, labelling and approval processes are flawed, unreliable and behind the times; therefore, a new regulator is needed. There is a general lack of assured competence and quality assurance across the sector, and unspoken knowledge of the extent of shortcuts being taken without real assessment of the potential consequences.

117. Only by standing back did these things become clear. A new regime is needed, underpinned by a set of key principles and a systems-based approach to both regulation and buildings. Competence, accountability and responsibility should be at the heart of the system, with the right players in the right places. There should be a culture change, with positive incentives for good building practice and for those willing to stand up and be counted for doing the right thing, and punitive sanctions for those who continue to try to game the system. A risk-based, proportionate and outcomes-based regulatory framework is needed to encourage real ownership and accountability. This would be overseen by the regulator, with those undertaking building work needing to demonstrate that they know what they have and are doing all they can to manage and reduce risk. The focus should be on delivering quality buildings that are safe and feel safe to live in, whether single dwellings or multi-occupancy, with genuine engagement with and concern for residents, and the rebuilding of trust and reputation.

118. The speaker started to map out how the system was supposed to work. It was too complicated to work properly and provided many opportunities to bypass the system. A new map was therefore devised that simplified the process, which is what the new Act is designed to do. We are now moving to a system in which responsibilities are clearer and the driving culture has changed. The role of regulation is clear: to hold industry to account, including by underpinning third-party accreditation.

119. The links and running thread should be quality, sustainability, and resilience.

120. Since the report, there has been progress in driving culture change ahead of regulatory change.

121. Lessons learnt over the last four years from the Industry Safety Steering Group, which is driving the right behaviours from industry, were then discussed. There is progress on all fronts, and the pace is accelerating now that regulation is in sight. However, too many are still waiting to be told what to do and asking for detail. Industry leadership is emerging, but not fast enough and not enough of it. Insurance and financial markets are now alert and sensitised to concerns. All the players involved are contributing to getting the right answer. New build and existing stock present different challenges. The voluntary Building a Safer Future Charter is an important development to drive change in the sector. Professional competence is fundamental: it is not enough to know your own job or trade; you also need to understand its impact on the integrity of the system overall.

122. We must really focus on what we are trying to achieve. The speaker stated that they were trying to make buildings that are fit for purpose, built to the right quality, and maintained at their quality throughout their use, not just at the point that they are finished.

123. A Safety Case regime for higher-risk buildings makes people think about what might happen and how to manage the consequences. Gateways are needed at the design and commissioning stages, together with actual delivery against what is set out at these gateways.

124. It won’t be enough to say what you intend to build; what has actually been built must be demonstrated with evidence and proof. The supply chain needs to be ready and able to provide data and performance accreditation.

125. Collaboration and data sharing are key so that every single person understands their role in that system and delivers on the overall picture.

126. This can include standardised system(s), performance testing, less substitution, advice on use and application from competent persons, regular integrity checks and “product stewardship”.

127. When you change or review any regulatory system, it has ripple effects and can drive a culture change. There is always more change to come; the regulatory system is dynamic.

128. This is a once in a generation opportunity to leave the race to the bottom behind and change industry practice for good.

129. An integrated systems-based approach to creating the whole regulatory framework is essential.

Evolving our assessment

130. Dr David Gott presented on a golden thread through past, present, and future.

131. The speaker started with the regulatory toxicology tragedy of thalidomide and how that enhanced and checked our thinking for 50 years. We need to move on from this and now start thinking differently about our approach to safety assessment.

132. The risk assessment paradigm does not exist in isolation. We need to think about how to use the best science to obtain the best answer, and then take other factors into consideration in the risk assessment process.

133. The speaker then used nanomaterials as an example of evolving guidance.

134. Nanomaterials have been around for a number of years, but we were not specifically considering them. It was not until a number of reports on their potential uses and risks emerged at the start of the millennium that the scientific community investigated them. Thereafter, a 2005 joint COT/COC/COM statement outlined principles for evaluation, issues and major uncertainties, acknowledging that many problems could not yet be answered. This then triggered a lot of research. EFSA began developing guidance, which evolved over 15 years using fundamental principles. EFSA then illustrated how it would apply the principles and outlined detailed approaches to evaluating and testing nanomaterials.

135. We live through change and will continue to do so. The principles for assessment, and the science behind them, should stay the same whether we are assessing something that is added deliberately or something that is already there. The guidance should describe how we use the available information to estimate risk. On the other hand, NAMs should use the minimum data needed to satisfy us that there is no meaningful risk.

136. The speaker then discussed the toxicological paradigm, how we should make more use of exposure, and how the biologically effective dose is still largely unknown. Population dose-response dynamics will be key to protecting the right people because we are all different; normally, we aim to protect the most sensitive population.

137. The Joint FAO/WHO Expert Committee on Food Additives (JECFA) risk assessment approach was explained. JECFA had the same general principles and methods for all chemical risk assessments. It has published reports since the mid-1950s and, in the early to mid-1980s, recommended reviewing the validity of the evaluation procedures then in place, based on the good science of the time.

138. The International Programme on Chemical Safety (IPCS) published Environmental Health Criteria monographs (EHCs) on Principles for the Safety Assessment of Food Additives and Contaminants in Food, EHC 70 (IPCS, 1987), and Principles for the Toxicological Assessment of Pesticide Residues in Food, EHC 104 (IPCS, 1990). Essentially, these told you how to interpret the data. Around 2000, IPCS recognised that there had been significant advances in chemical analysis, toxicological assessment and risk assessment procedures.

139. They took particular note of two reviews, from the IPCS Harmonization Project and the Food Safety in Europe project of the European Commission (Barlow et al., 2002; Renwick et al., 2003), which were used to inform the development of a revised monograph, EHC 240: Principles for Risk Assessment of Chemicals in Food. This describes general principles and methods for the risk assessment of additives, contaminants, pesticide residues, veterinary drug residues and natural constituents in food.

140. When it came to guidance and what data might be needed, the traditional guidance of the Scientific Advisory Committees (SAC) was derived from the 1960s approaches to pharmaceuticals. It emphasised trying not to miss anything, because of previous experiences such as thalidomide. It therefore identified the need for specific toxicological studies and appeared to require them, which at the time meant studies in two species.

141. Then there was an opportunity to reboot, which started with a review of existing guidance and what was then known. Discussions covered what data were used and what was needed for a decision, recognising that one size does not fit all. There was a desire to learn from the past and be ready for the future by evolving a much more scientific approach.

142. This evolution turned out to be what is now known as a tiered approach (for some categories of chemical, such as food additives), which is about thinking about your data, on a case-by-case basis, rather than just generating data. It takes into account the physicochemical data on the compound, the toxicity data on structurally related compounds and any available information on structure-activity relationships. Animal testing was in line with the 3Rs (Replacement, Reduction and Refinement) strategies. An advantage inherent in the rationale is that results of studies at higher tiers will, in principle, supersede results at lower tiers. Applicants were able to identify relevant data needs more readily, and the approach allowed adequate assessment of risks to humans and strengthened the scientific basis for the assessment.

143. The tiered approach consists of three tiers, for each of which testing requirements, key issues and triggers are described. A minimal dataset applicable to all compounds is set out under Tier 1; Tier 2 testing is required for compounds that are absorbed or that demonstrate toxicity or genotoxicity in Tier 1; and Tier 3 testing should be performed on a case-by-case basis, taking into consideration all the available data, to characterise specific endpoints needing further investigation following findings in Tier 2 tests.
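The tier triggers described above can be sketched as a simple decision function. This is a hypothetical illustration only: the names `Tier1Findings` and `required_tiers`, the yes/no inputs and the list of Tier 2 endpoint concerns are assumptions made for the sketch, and in practice each trigger is a case-by-case scientific judgement rather than a boolean field.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tier1Findings:
    """Hypothetical yes/no summary of Tier 1 results for one compound."""
    absorbed: bool      # evidence that the compound is absorbed
    toxicity: bool      # toxicity observed in Tier 1 studies
    genotoxicity: bool  # genotoxicity observed in Tier 1 studies


def required_tiers(t1: Tier1Findings,
                   tier2_endpoint_concerns: Optional[List[str]] = None) -> List[str]:
    """Return the tiers triggered under the simplified rules described in the text.

    - Tier 1 (minimal dataset) applies to every compound.
    - Tier 2 is triggered if the compound is absorbed, or shows toxicity
      or genotoxicity in Tier 1.
    - Tier 3 is case by case, only for specific endpoints flagged by Tier 2 findings.
    """
    tiers = ["Tier 1"]
    if t1.absorbed or t1.toxicity or t1.genotoxicity:
        tiers.append("Tier 2")
        # Tier 3 can only follow from Tier 2 findings, so it is nested here.
        if tier2_endpoint_concerns:
            tiers.append("Tier 3 (case by case): " + ", ".join(tier2_endpoint_concerns))
    return tiers


# Usage: a compound with no Tier 1 triggers stops at the minimal dataset.
print(required_tiers(Tier1Findings(absorbed=False, toxicity=False, genotoxicity=False)))
# ['Tier 1']

# An absorbed compound with a hypothetical Tier 2 concern for one endpoint.
print(required_tiers(Tier1Findings(absorbed=True, toxicity=False, genotoxicity=False),
                     ["reproductive toxicity"]))
# ['Tier 1', 'Tier 2', 'Tier 3 (case by case): reproductive toxicity']
```

Nesting the Tier 3 check inside the Tier 2 branch mirrors the point that Tier 3 characterises endpoints arising from Tier 2 findings; a real assessment would also weigh all other available data at each step.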

144. The speaker accepted that the approach was not perfect and had errors as well as flaws, as it required compromise on what people were prepared to contemplate. However, it does balance the data requirements against the risk. It initially uses less complex tests to obtain hazard data, which are evaluated to determine whether they are sufficient for risk assessment or, if not, to design studies at a higher tier.

145. Some of the unintended challenges were worries about the limited experience of what panels would consider sufficient, and difficulty with the emphasis on integrating information rather than just presenting test results. Scientists generally welcomed the tiered approach and its emphasis on good science. Others tried to over-interpret it, including the meaning of words that had been chosen by consensus (and to be understandable by non-native speakers).

146. It was an achievement, as it did what it set out to do: to lower the amount of data required and to accept animal use where it was used properly.

147. Integrating the evidence is absolutely key and is the critical part of the risk assessment. We need to identify all the potential hazards, determine whether they need characterising, and check whether the toxicological data are internally consistent and consistent with our wider knowledge. It is important to note that every experiment involves design compromises and may use small numbers and high doses, so there is a need to think about possible changes in kinetics.

148. We need to think about using all data sources, such as human studies, animal studies and in vitro studies. These will contribute dose-response information on what the compound does to the body (dynamics) and what the body does to the compound (kinetics), noting that these relationships rely on models, which may or may not reflect reality. When providing the risk assessment, we have to be honest with risk managers that we may not know all the answers, and point out what is uncertain.

149. The speaker then gave personal concluding thoughts on the next evolution and future challenges. There needs to be increased emphasis on toxicokinetic information to allow evaluation of internal dose and reduce reliance on default uncertainty factors. There needs to be greater emphasis on integrating information from various sources. More thought is needed on the relevance of observed changes for adverse effects in humans. We need to start thinking about intermediate endpoints and how to incorporate and use them. There need to be indications of when we can rely on less data or testing.

150. Some of the challenges will be how to incorporate NAMs and omics technologies into our framework. There are lots of roadmaps; perhaps we should be radical and just do it. What should we do about the unknown unknowns, as well as uncertainty factors? How much validation will we require? Biology and medicine have a long history of serendipitous findings and good ideas that were based on imperfect knowledge. Do we need to revisit the acceptable daily intake (ADI)/tolerable daily intake (TDI) concept, as well as what constitutes an appreciable health risk? Should we be pressing risk managers to ask ‘what is dangerous?’ rather than ‘tell me a safe intake’? We need an estimate of the amount of a substance in air, food or drinking water that can be taken in daily over a lifetime without appreciable health risk.

151. Such values are not always good estimates of the harmfulness of chemicals.

152. Deciding what level of risk is acceptable is down to society. We will need to inform this debate with reality and accept what is unachievable. We will need to acknowledge that we cannot change some events that will happen to all. We must be clear that every choice and decision will have consequences, both known and unknown. We know a lot but not everything, and in hindsight some things will prove to be wrong because of that. Everyone is an individual who makes choices, and it is important to remember that in future. We might be able to prolong life and improve its quality, but some things are beyond our control.

Roundtable Discussions from Session 2

Gaps identified and recommendations for improvement

153. Guidance: The scope of the current guidance/guidelines should be explored and defined. The guidance must emphasize limitations of extrapolation of raw data to observed and expected results. There is a need for a systems-based approach. Tiered testing could be incorporated such as toxicogenomics in vitro, which is considered a conservative approach and appropriate for low tier screening.

154. Frameworks: It was questioned whether a different framework is needed to distinguish between those things that are purposefully added to food and those which occur as contaminants. For example, are different datasets required for exposure assessments? It was discussed that exposure assessments for COT papers tend to be very thorough and should maintain this level of detail and perhaps extend it? The idea of storing data from COT exposure assessments in a centralised accessible format was suggested. Each COT paper contains a lot of information, and ideally risk assessors should be able to easily access and reuse this data as appropriate. It was proposed to have an overarching chemicals framework that would link to various committees and advisory groups for specific categories of chemical.

155. NAMs: The need for a deep-dive analysis on where NAMs have been applied and what indicators could be used to support and/or supplement what may be needed for the guidance (i.e. to prevent loopholes). There is a need to be able to interpret data generated by NAMs (e.g. computational models) as a useful resource for hazard identification. but where to draw the line? Will need to understand the method as well as the limitations of the outputs. We need to ensure that a bigger picture of where NAMs fit in to the regulatory space is kept in mind. It was stated that some people’s experience of NAMs is that they’re difficult to interpret. It is hard to pinpoint specific requirements for a NAMs study. It was also discussed that a basic study data set should not be stipulated. The data should enable hazard characterisation, exposure assessment, and risk characterisation and the guidance should not be a set of check boxes. A key question was whether mechanistic links to adversity are needed? However, it was raised again that defining ‘adversity’ is a key challenge that revolves around use of language in different contexts. The meaning behind the name (New Approach Methodologies) varies, and one definition is not limited to methodologies that are purely in vitro or in silico, but instead takes account of any novel approach. Hence, some NAMs may include in vivo methodologies, for example, you could take blood samples to assess the kinetics (this approach is a compromise) or may include reporter genes for specific stress responses. Testing hypotheses may not be possible without the use of a gold standard method so it may not be possible to fully move away from animal studies (where they are the gold standard) for the foreseeable future.

156. SACs: There is a need to strengthen the link between the three committees (and Joint Expert Groups) to ensure a consistent and harmonised approach. It may be necessary to do this across all Committees that provide advice on chemical risk to the government. Regular transparent communication between each of their activities was also identified as a gap. Noted that there is a limited pool and capacity to recruit new experts.

157. Does the COT need to present a narrative review of all studies, or might a tabular and/or graphical representation be more useful, easier to digest, and informative? Narrative review can sometimes be overwhelming, or conceal the most useful aspects of the paper, the COT therefore needs to work to draw these aspects out, summarise, and present them. It is important to draw a balance between providing systematic reviews and narrative reviews in COT discussion papers and statements. The limits of accessibility requirements were mentioned, and that they may potentially preclude such practices although noted that extensive tables and graphs are fine if accessible alternatives are provided. The suggestion of segmenting papers into more and less accessible sections was suggested.

158. Judging the quality of a published paper is not a tick box exercise. There are more problematic practices in academic publishing, like intentional manipulation of data or the use of paper mills that produce papers that look legitimate, but which may use fabricated data.

159. The Regulators: It is fundamental to capture institutional wisdom. In the US, there seems to be a good link between departments and agencies (e.g. the EPA, the National Toxicology Program (NTP) and the National Institute of Environmental Health Sciences (NIEHS)) that makes responsive research possible. There does not seem to be anything similar in the UK, though participants were aware of UK Research and Innovation (UKRI). Reviewing large datasets poses challenges of time and resources. Ways and tools to handle large volumes of data will need to be developed (especially for Regulated Products and trade assessments), and the available toolkits specified. There is a need for more “quality” resources for risk assessors, including capacity to supervise and lead. Assessors need training in how to assess and review all the different types of data, and this training needs to be built into time frames. An overarching issue is how to deal with differing interpretations of the data; a standardised approach is needed, but it risks becoming a tick box exercise. There is a gap in the integration of the sustainability, industry, safety and regulatory aspects of GB chemical regulations and strategies. Purely academic papers do not necessarily inform work on regulated products, as many of them omit key information that is not required for academic publication. Because such papers have objectives other than informing risk assessment, this is a future challenge to note.

160. Assessment: This discussion raised issues related to HBGVs and their utility/application, the differing interpretations of language in different fields, and the way data presented to the COT may be archived and databased for future use in assessments. There was also discussion of acute versus chronic exposure and the requisite datasets, and of risk assessment for deliberate consumption versus contaminants.

161. The discussion began by considering whether lifetime exposure is a relevant index. For instance, sometimes the ADI is the only tool available, but its use can give rise to overly conservative assessments. This led into a discussion about language and the meaning of ‘dangerous’ vs ‘safe’: although a value can be set that indicates no appreciable risk, it does not indicate the level above this at which there is a health concern. It is impossible to exclude the possibility that some effect might be occurring. For instance, cadmium exposure is never ‘safe’, but reducing exposure is not always possible. Safety is not binary, with safe and unsafe as the only options, but communicating this is difficult.

162. The GB risk assessment system needs gradual evolution. It was stated that the system is currently functioning well, but is perhaps clunky due to its age and its reliance on animal testing.

163. Uncertainties relating to mixtures will be important to consider going forward, particularly how chemicals interact and how these interactions can be integrated into risk assessments. It was also stated in this context that getting the problem formulation right is a crucial starting point.

Session 3: How to achieve?

What is required by Retained EU Law (Revocation and Reform) REUL?

164. Dr Erica Pufall introduced a session on Retained EU law (REUL). Retained EU law is a legal term introduced into UK law under the European Union (Withdrawal) Act 2018. It is a tailored legal concept capturing EU-derived laws, rights and principles retained and preserved in UK law for legal continuity after the transitional arrangements under the Withdrawal Agreement.

165. REUL is all the EU law that was incorporated into domestic UK legislation when the UK exited the EU; all the laws that were needed were copied into UK law, and this became ‘Retained EU Law’. REUL makes up >90% of UK food law.

166. The Retained EU Law (Revocation and Reform) Bill (“REUL Bill”) was introduced into the House of Commons on 22 September 2022.

167. This Bill allows for the revocation or amendment of REUL and removes the special features REUL has in the UK legal systems, making it significantly easier to undertake reform in the longer term.

168. In Northern Ireland, under the Northern Ireland Protocol, much of EU food and feed law is directly applicable, rather than being REUL. The FSA are working through any implications of the Bill for Northern Ireland food and feed law.

169. The REUL Bill proposed that REUL would be removed from the statute book at the end of 2023 unless departments and the Devolved Administrations took steps to move it into domestic legislation: any REUL that departments did not act to transfer would fall off the statute book at the ‘sunset’ date of 31 December 2023.

170. On 10 May 2023, the UK Government tabled an amendment to the Bill replacing the sunset clause with a revocation schedule that lists the REUL to be revoked (sunset) by departments. This effectively flips the Bill on its head, changing the default position from REUL lapsing to REUL being preserved.

171. This schedule is a list of the retained EU laws the UK Government intends to revoke under REUL at the end of 2023. All other legislation not on the Schedule will automatically become domestic law.

172. The Revocation Schedule includes eight pieces of law for which the FSA is responsible. The FSA has carefully examined these eight pieces of legislation and is confident that removing them will not affect the safety or standards of UK food. Our list of REUL to be revoked is published on the Food Standards Agency website as ‘Retained EU Law to be sunset or revoked by 31 December 2023’.

173. The speaker then discussed what REUL means for the FSA. Retained EU law covers a range of areas relating to food and feed safety. The FSA has long-standing ambitions to reform the food and feed regulatory system, and the REUL Bill presents a significant opportunity to undertake legislative change to support our wider ambitions for reform. We have ideas on where we can make significant improvements, which we are discussing with industry to ensure we understand and address potential concerns. For example, an area the FSA has already committed to reviewing is the regulatory framework for Regulated Products, including Novel Foods. This will include how the process for approving novel foods could be updated, to create a transparent and effective system that is the best in the world for innovators, investors and consumers and encourages safe innovation in the sustainable protein sector.

174. The FSA Board have agreed five principles to guide our approach to REUL:

- Protecting public health, food safety and standards: we should not make changes which reduce the safety or standards of food produced or eaten in the UK.

- Protecting consumer interests: we should not make changes which are detrimental to the wider consumer interest in relation to food (for example by making food harder to afford).

- Maintaining consumer and trading partner confidence: we should not make changes which are likely to reduce consumer or trading partner confidence in UK food, or which are inconsistent with our international trade agreements.

- Supporting innovation and growth: we should seek to make changes that support innovation, growth and the introduction of new technologies, (including innovation that could help to make food healthier or more sustainable) and remove unnecessary burdens on business.

- Managing divergence: we should, as far as possible, seek consistency of approach across GB, in line with common framework commitments. We will consider the impact of divergence on public health and safety and the wider impact for consumers or businesses of having differing rules across the UK.

HSE Pesticide NAMs Case Study

175. Dr Valerie Swaine presented on the experience with NAMs in Plant Protection Products (PPPs) toxicology assessments in GB (Great Britain).

176. The speaker stated that HSE defines NAMs as in silico, in chemico, in vitro and in vivo methods, or combinations of these, that can provide information on chemical hazards and risks while replacing or reducing the use of animals.

177. The speaker then discussed PPPs as an example of chemical mixtures to which NAMs are being applied. For authorisation of these products, a standard “six-pack” of acute toxicology animal studies is required; however, Article 62 of the PPP Regulation says: “Testing in vertebrates only where no other methods are available”. By applying Article 62, it is possible to meet the data requirements without performing animal testing. For acute oral, dermal and inhalation toxicity, HSE applies the mixtures calculation method of the CLP (Classification, Labelling and Packaging) Regulation; for skin and eye irritation, HSE encourages the use of in vitro OECD test methods and OECD IATAs; for skin sensitisation, HSE recommends the recently adopted OECD defined approaches based on non-animal methods; and for dermal absorption, HSE requires the in vitro skin absorption method (OECD 428).

178. HSE has also issued guidance to applicants on Meeting the requirements for toxicological information in applications for authorisation of Plant Protection Products under Regulations (EC) No 1107/2009 and (EC) No 284/2013 where these alternative approaches are explained.

179. The speaker then briefly described each of these examples.

180. For acute oral toxicity, classification of mixtures can be determined using the additivity formula of CLP by calculating the acute toxicity estimate (ATE) of a mixture. This is based on the available acute oral toxicity data on each component of the mixture and their respective concentration in the mixture.
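The additivity formula can be illustrated with a short calculation. This is a minimal sketch, assuming the CLP form 100 / ATE_mix = Σ(C_i / ATE_i), summed over relevant ingredients with a known acute oral ATE (ingredients of no known acute toxicity are simply left out), and the CLP acute oral category upper limits of 5, 50, 300 and 2000 mg/kg bw. It ignores the CLP adjustment for ingredients of unknown toxicity above 10% and the conversion of range data to point estimates; the component names and values in the usage example are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Component:
    name: str
    conc_percent: float   # concentration in the mixture (% w/w)
    ate_mg_per_kg: float  # acute oral toxicity estimate of the component (mg/kg bw)


def mixture_ate(components: list[Component]) -> float:
    """Additivity formula: 100 / ATE_mix = sum(C_i / ATE_i)."""
    total = sum(c.conc_percent / c.ate_mg_per_kg for c in components)
    if total == 0:
        return float("inf")  # no relevant acutely toxic ingredients
    return 100.0 / total


# Upper bound (inclusive) of each CLP acute oral toxicity category, in mg/kg bw.
ORAL_CATEGORY_LIMITS = [(5.0, 1), (50.0, 2), (300.0, 3), (2000.0, 4)]


def oral_category(ate_mix: float) -> Optional[int]:
    """Return the acute oral category, or None if the mixture is not classified."""
    for upper, category in ORAL_CATEGORY_LIMITS:
        if ate_mix <= upper:
            return category
    return None


if __name__ == "__main__":
    # Hypothetical formulation: 40% active (ATE 300) and 10% co-formulant (ATE 100).
    formulation = [
        Component("active", 40.0, 300.0),
        Component("co-formulant", 10.0, 100.0),
    ]
    ate = mixture_ate(formulation)
    print(f"ATE_mix = {ate:.1f} mg/kg bw, category = {oral_category(ate)}")
```

Here 100 / (40/300 + 10/100) ≈ 428.6 mg/kg bw, which falls in Category 4 (300 < ATE ≤ 2000).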

181. For skin corrosion / irritation, the OECD Guidance Document on an Integrated Approach on Testing and Assessment (IATA) for Skin Corrosion and Irritation which was published in 2014 can be used.

182. The IATA provides a framework guiding users on testing and assessing skin irritation for:

- Classification and labelling decisions.

- Weight of evidence (WoE).

- Approach to testing.

- Further information requirements.

183. For serious eye damage / eye irritation, the first IATA was published in 2017 and updated in 2019. The eye irritation IATA framework provides guidance similar to that of the skin irritation IATA. However, at the time of publication, in vitro methods could identify only chemicals classified as causing serious eye damage (Category 1, H318) or not classified (non-irritant); none could identify Category 2 eye irritants, so a WoE assessment and occasionally an animal test was required. Last year, the OECD published a new in vitro eye irritation method, the SkinEthic™ Human Corneal Epithelium (HCE) Time-to-Toxicity (TTT) test, which has been recommended as a full replacement for the in vivo test. It can correctly identify chemicals (both substances and mixtures) in all three CLP classification categories. Guidance Document No. 263 on the IATA can be consulted for further testing with other adequate in vitro tests, if deemed necessary.

184. For skin sensitisation, in 2021 the OECD published Guideline No. 497 on Defined Approaches, which are based on in vitro, in chemico and in silico tools. Guideline 497 is the first internationally harmonised guideline to describe a non-animal approach that can be used to replace an animal test to identify skin sensitisers. Defined approaches are slightly different from IATAs as they define a set of information sources to derive a prediction without the need for expert judgment. Results from multiple information sources can be used together in defined approaches to achieve an equivalent or better predictive capacity than that of the animal tests to predict responses in humans. One example of a defined approach is based on a combination of in vitro/in chemico tests to address some of the key events of the AOP for skin sensitisation. This is then combined with a (Q)SAR prediction. A score is then obtained which allows the prediction of the skin sensitisation hazard potential and potency sub-categorisation of the substance/mixture according to the United Nations Globally Harmonized System (GHS) of Classification and Labelling of Chemicals.
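The scoring logic of such a defined approach can be sketched as follows. This is a minimal illustration, assuming the Integrated Testing Strategy (ITS) described in Guideline 497, which combines the h-CLAT (in vitro), the DPRA (in chemico) and an in silico prediction into a total score of 0 to 7. The score cut-offs below are our reading of the guideline and should be checked against it before any use; the guideline's rules for missing or inconclusive inputs, cysteine-only DPRA scoring, borderline results and applicability domains are deliberately omitted. The input values in the usage example are hypothetical.

```python
from typing import Optional


def hclat_score(mit_ug_per_ml: Optional[float]) -> int:
    """Score the h-CLAT minimum induction threshold (MIT); None means a negative result."""
    if mit_ug_per_ml is None:
        return 0
    if mit_ug_per_ml <= 10:
        return 3
    if mit_ug_per_ml <= 150:
        return 2
    if mit_ug_per_ml <= 5000:
        return 1
    return 0  # outside the tested range: treated as negative in this sketch


def dpra_score(mean_depletion_pct: float) -> int:
    """Score mean cysteine/lysine peptide depletion (%) from the DPRA."""
    if mean_depletion_pct >= 42.47:
        return 3
    if mean_depletion_pct >= 22.62:
        return 2
    if mean_depletion_pct >= 6.38:
        return 1
    return 0


def its_prediction(mit_ug_per_ml: Optional[float], mean_depletion_pct: float,
                   in_silico_positive: bool) -> tuple[int, str]:
    """Sum the three scores (0-7) and map the total to a GHS/CLP sub-category."""
    total = (hclat_score(mit_ug_per_ml)
             + dpra_score(mean_depletion_pct)
             + (1 if in_silico_positive else 0))
    if total >= 6:
        return total, "Category 1A"
    if total >= 2:
        return total, "Category 1B"
    return total, "Not classified"


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    print(its_prediction(100.0, 30.0, True))   # h-CLAT 2 + DPRA 2 + in silico 1
    print(its_prediction(None, 10.0, False))   # h-CLAT 0 + DPRA 1 + in silico 0
```

The first call totals 5 and maps to Category 1B; the second totals 1 and is not classified. No expert judgement enters the calculation, which is the distinguishing feature of a defined approach.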

185. The speaker then discussed HSE activities on applying NAMs to pesticide active substance assessments. HSE is currently working at international level to develop a waiver for the dog study. HSE is also working with the UK Committee on Carcinogenicity to develop a new testing strategy for the carcinogenicity assessment of chemicals; the current approach is based on two bioassays (one in rats and one in mice) that use a large number of animals. HSE supports greater use of read-across between active substances, potentially augmented by omics technologies. In addition, HSE encourages increased use of (Q)SAR for metabolites and impurities to fill data gaps. Other applications of NAMs include the use of the Threshold of Toxicological Concern (TTC) Cramer Class III value in the relevance assessment of groundwater metabolites (guidance is published on the HSE website) and the use of the TTC approach for plant and livestock metabolites when setting the Residue Definition for risk assessment. HSE is also working to increase its engagement with stakeholders at national and international level on NAMs.
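A TTC comparison of this kind reduces to simple exposure arithmetic. This is a minimal sketch, assuming the generic Cramer class thresholds in EFSA's 2019 TTC guidance (Class III: 1.5 µg/kg bw/day) and default drinking-water assumptions of 2 L/day for a 60 kg adult. Those exposure defaults are illustrative assumptions only; HSE's published groundwater relevance guidance may use different consumption, body weight or allocation assumptions, and the metabolite concentration in the usage example is hypothetical.

```python
# Generic TTC values (µg/kg bw/day) by Cramer class, as in EFSA's 2019 TTC guidance.
TTC_UG_PER_KG_BW_DAY = {"I": 30.0, "II": 9.0, "III": 1.5}


def daily_intake_ug_per_kg(conc_ug_per_l: float, water_l_per_day: float = 2.0,
                           body_weight_kg: float = 60.0) -> float:
    """Estimated intake from drinking water (µg/kg bw/day); defaults are assumptions."""
    return conc_ug_per_l * water_l_per_day / body_weight_kg


def max_conc_below_ttc(cramer_class: str = "III", water_l_per_day: float = 2.0,
                       body_weight_kg: float = 60.0) -> float:
    """Groundwater concentration (µg/L) at which estimated intake equals the TTC."""
    return TTC_UG_PER_KG_BW_DAY[cramer_class] * body_weight_kg / water_l_per_day


def below_ttc(conc_ug_per_l: float, cramer_class: str = "III") -> bool:
    """True if the estimated intake does not exceed the TTC for that Cramer class."""
    return daily_intake_ug_per_kg(conc_ug_per_l) <= TTC_UG_PER_KG_BW_DAY[cramer_class]


if __name__ == "__main__":
    conc = 10.0  # hypothetical metabolite concentration in groundwater, µg/L
    print(f"intake = {daily_intake_ug_per_kg(conc):.3f} µg/kg bw/day, "
          f"below Class III TTC: {below_ttc(conc)}")
    print(f"Class III concentration limit = {max_conc_below_ttc():.1f} µg/L")
```

Under these assumptions, a Cramer Class III metabolite stays at or below the TTC up to 1.5 × 60 / 2 = 45 µg/L in drinking water; changing the consumption or body weight defaults moves that limit proportionally.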

Regulated Products UK

186. Ms Ruth Willis presented an overview of regulated products risk assessment. The role of the FSA is to ensure food you can trust, guided by the organisation’s core values: food is safe; food is what it says it is; and food is healthier and more sustainable.

187. Following EU Exit, the FSA and Food Standards Scotland as the regulators are responsible for approval of all food and feed regulated products in Scotland, England, Wales and Northern Ireland. The FSA follows a robust risk analysis process to deliver independent, evidence-based advice and recommendations to ministers.

188. As a regulator, the FSA must balance consumers’ and industry’s desire for new foods against the need for safety and proportionate oversight. The FSA therefore aims to be a proportionate and agile regulator, supporting food innovation while maintaining food safety standards. The FSA will look to strike the right balance in how much information we need from business applicants to conduct risk assessments and deliver robust and timely regulatory decisions.

189. Regulated products approvals are delivered in partnership with many government departments and scientific advisory committees. The steps in the regulatory framework include: